Sandia fog facility enables technology testing, foundational research

Self-flying drones and autonomous taxis that can safely operate in fog may sound futuristic, but new research at Sandia’s fog facility is bringing the future closer.

Fog can make travel by water, air and land hazardous when it becomes hard for both people and sensors to detect objects. Researchers at Sandia’s fog facility are addressing that challenge through new optical research in computational imaging and by partnering with NASA researchers working on Advanced Air Mobility, Teledyne FLIR and others to test sensors in customized fog that can be measured and repeatedly produced on demand.

“It’s important to improve optical sensors to better perceive and identify objects through fog to protect human life, prevent property damage and enable new technologies and capabilities,” said Jeremy Wright, optical engineer.

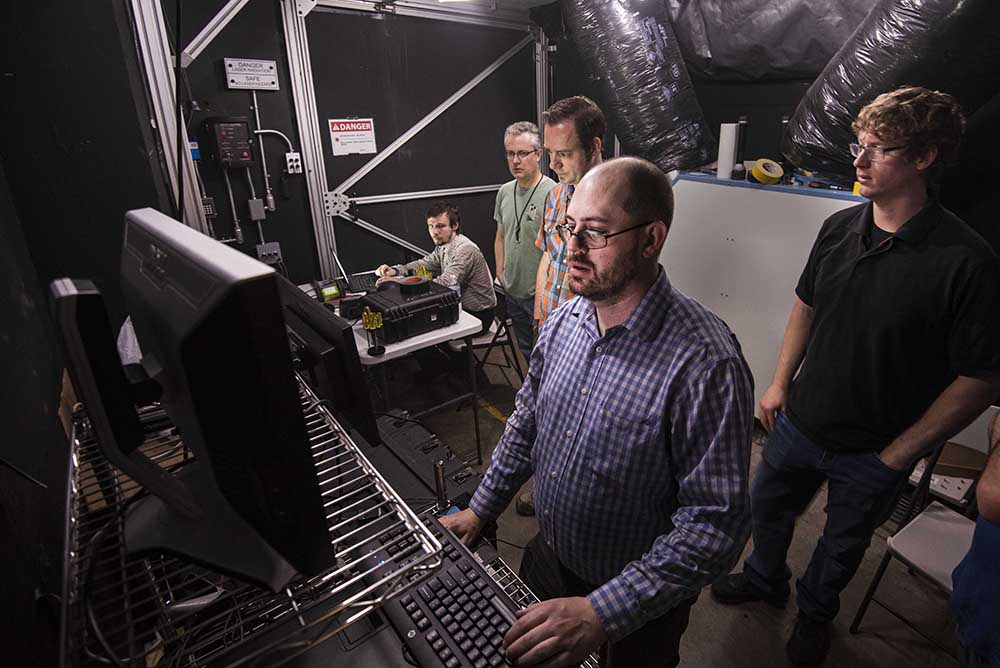

Built in 2014, Sandia’s fog chamber is 180 feet long, 10 feet tall and 10 feet wide. The chamber is lined with plastic sheeting to entrap the fog.

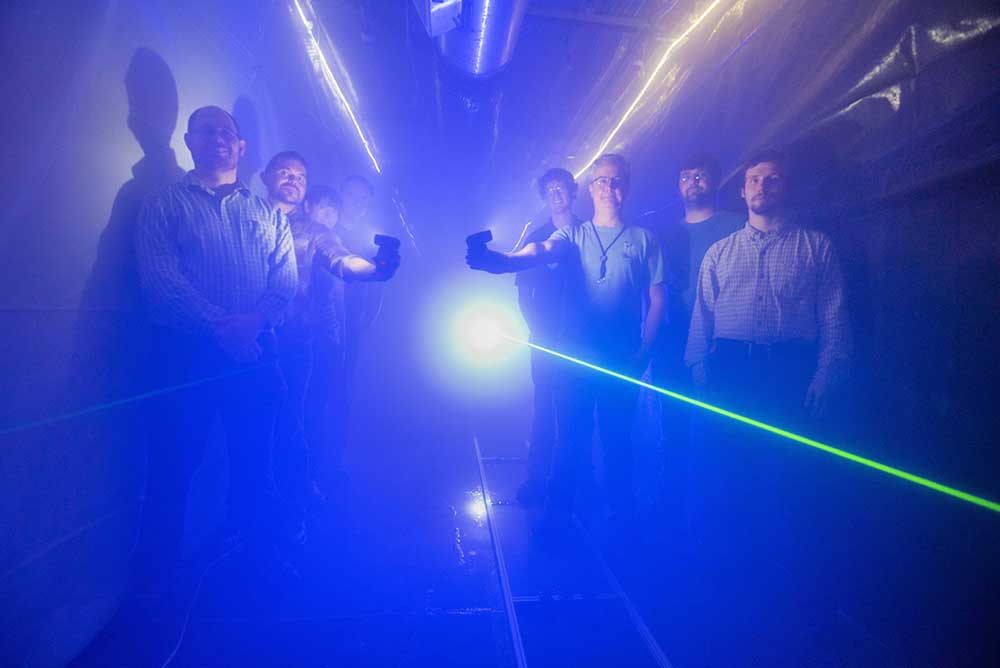

When the team begins a test, 64 nozzles hiss as they spray a custom mixture of water and salt. As the spray spreads, the humidity builds and thick fog forms. Soon, an observer inside won’t be able to see the walls, ceiling or entrance through the aerosol, and people and objects a few feet away will be obscured or completely hidden.

Sandia’s researchers carefully measure properties of fog over time to understand how it forms and changes. By adjusting environmental parameters, the researchers can change the fog properties to better match naturally occurring fog.

“Our team can measure and completely characterize the fog that we produce at the facility, and we can repeatedly generate similar fog on different days,” said Andres Sanchez, chemical engineer. “Having consistent and measurable conditions is important when we’re testing how sensors perform in fog.”

Enabling safe all-weather operations for self-flying vehicles, planes and drones

Researchers from NASA’s Ames Research Center recently visited Sandia to perform a series of experiments to test how commercially available sensors perceive obstacles in fog. The researchers belong to the Revolutionary Aviation Mobility group, which is part of the NASA Transformational Tools and Technologies project.

“We tested perception technologies that might go into autonomous air vehicles,” said Nick Cramer, the lead NASA engineer for this project. “We want to make sure these vehicles are able to operate safely in our airspace. This technology will replace a pilot’s eyes, and we need to be able to do that in all types of weather.”

The team set up a stationary drone in the chamber as a target and then tested various sensors to see how well they could perceive the drone in the fog.

“The fog chamber at Sandia National Laboratories is incredibly important for this test,” Cramer said. “It allows us to really tune in the parameters and look at variations over long distances. We can replicate long distances and various types of fog that are relevant to the aerospace environment.”

Cramer said one of the challenges of self-flying technology is that there would be a lot of small vehicles flying in close proximity.

“We need to be able to detect and avoid these small vehicles,” Cramer said. “The results of these tests will allow us to dig into what the current gaps in perception technology are to moving to autonomous vehicles.”

Fog facility helps prove technology

Teledyne FLIR has tested its own infrared cameras at Sandia’s fog facility to determine how well they detect and classify pedestrians and other objects. Chris Posch, automotive engineering director for Teledyne FLIR, said the cameras could be used to improve the safety of both today’s vehicles, which have advanced driver-assistance systems features such as automatic emergency braking, and the autonomous vehicles of the future.

“Fog testing is very difficult to do in nature because it is so fleeting and there are many inherent differences typically seen in water droplet sizes, consistency and repeatability of fog or mist,” Posch said. “As the Sandia fog facility can repeatably create fog with various water content and size, the facility was critical in gathering the test data in a thorough scientific manner.”

Sandia and Teledyne FLIR conducted multiple performance tests with vehicle safety sensors including visible cameras, longwave infrared cameras, midwave infrared cameras, shortwave infrared cameras and lidar sensors.

Posch said the results showed that Teledyne FLIR’s longwave infrared cameras can accurately detect and classify pedestrians and other objects in most fog, where visible cameras are challenged.

New research to detect, locate and image objects through fog

A team of Sandia researchers recently published a paper in Optics Express describing current results from a three-year project. The project combines computational imaging with the science behind how light propagates and scatters in fog to create algorithms that enable sensors to detect, locate and image objects in fog.

“Current methods to see through fog and with scattered light are costly and can be limited,” said Brian Bentz, electrical engineer and project lead. “We are using what we know about how light propagates and scatters in fog to improve sensing and situational awareness capabilities.”

Bentz said the team has modeled how light propagates through fog to an object and to a detector (usually a pixel in a camera) and then inverted that model to estimate where the light came from and characteristics of the object. By changing the model, this approach can be used with either visible or thermal light.
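As a rough illustration of the model-then-invert idea Bentz describes, the sketch below uses a deliberately simplified stand-in, not the team's published model. It assumes a point source in uniform fog whose light reaches each detector weakened by exponential (Beer-Lambert) attenuation and inverse-square spreading, and it ignores the scattered light that the real research accounts for. The function names, the extinction coefficient, the detector layout and the grid search are all hypothetical choices made for this example.

```python
import math

def forward(source, power, detectors, mu):
    """Toy forward model (an assumption, not Sandia's model).

    Returns the reading at each detector from a point source in uniform fog:
    exponential attenuation times inverse-square spreading.
    """
    readings = []
    for det in detectors:
        r = math.dist(source, det)
        readings.append(power * math.exp(-mu * r) / (4.0 * math.pi * r * r))
    return readings

def locate(readings, detectors, mu, grid):
    """Invert the toy model with a grid search over candidate positions.

    In log space the unknown source power is a constant offset, so for each
    candidate it is fit as the mean difference between measurement and model.
    """
    log_meas = [math.log(v) for v in readings]
    best_pos, best_power, best_cost = None, None, float("inf")
    for cand in grid:
        shape = []
        for det in detectors:
            r = math.dist(cand, det)
            shape.append(-mu * r - math.log(4.0 * math.pi * r * r))
        offset = sum(m - s for m, s in zip(log_meas, shape)) / len(shape)
        cost = sum((m - s - offset) ** 2 for m, s in zip(log_meas, shape))
        if cost < best_cost:
            best_pos, best_power, best_cost = cand, math.exp(offset), cost
    return best_pos, best_power

# Hypothetical setup: five "pixels" along y = 0, positions in meters.
MU = 0.3  # assumed fog extinction coefficient, per meter
detectors = [(float(x), 0.0) for x in range(5)]
grid = [(0.5 * i, 0.5 * j) for i in range(11) for j in range(2, 21)]

# Simulate noise-free readings from a hidden source, then recover it.
readings = forward((2.5, 6.0), 100.0, detectors, MU)
estimate, power_est = locate(readings, detectors, MU, grid)
print(estimate, round(power_est, 3))
```

Because the unknown source power shifts every log reading by the same amount, it can be fit in closed form for each candidate position, which leaves a search over position alone. Real measurements would include noise and scattered light, so a practical inversion would need a richer model than this one.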

Bentz said the team has used the model to detect, locate and characterize objects in fog and will be working on imaging objects during the project’s final year. The team has been using Sandia’s fog facility for experimental validations.

Parallel to this research, the Sandia team created two bench-top fog chambers to support a project at Purdue University, a Sandia Academic Alliance partner based in West Lafayette, Indiana.

Sandia is studying and characterizing the fog generated by its new bench-top fog chamber, while Purdue is using its twin system to perform experiments.

Purdue professor Kevin Webb is leading research to develop an imaging technology that uses the way light interferes with itself when it scatters to detect objects.

The Sandia team has recently presented its work at SPIE and CLEO. The computational imaging and Academic Alliance research was funded by Sandia’s Laboratory Directed Research and Development program.