Big Data

Automated Alt Text

- LAVIS’s BLIP software: https://github.com/salesforce/LAVIS

- “Evaluating the effectiveness of automatic image captioning for web accessibility” by Leotta, Mori, and Ribaudo

Audio Captioning

Interpretation vs. Captioning

- Audio visualization

- Truskinger, A., Brereton, M., & Roe, P. (2018, October). Visualizing five decades of environmental acoustic data. In 2018 IEEE 14th International Conference on e-Science (e-Science) (pp. 1-10). IEEE.

- Cottingham, M. D., & Erickson, R. J. (2020). Capturing emotion with audio diaries. Qualitative Research, 20(5), 549-564.

- Visual sonification

- Ali, S., Muralidharan, L., Alfieri, F., Agrawal, M., & Jorgensen, J. (2020). Sonify: Making visual graphs accessible. In Human Interaction and Emerging Technologies: Proceedings of the 1st International Conference on Human Interaction and Emerging Technologies (IHIET 2019), August 22-24, 2019, Nice, France (pp. 454-459). Springer International Publishing.

- Sawe, N., Chafe, C., & Treviño, J. (2020). Using data sonification to overcome science literacy, numeracy, and visualization barriers in science communication. Frontiers in Communication, 5, 46.

- Tactile vibration responses

- Yoshioka, T., Bensmaia, S. J., Craig, J. C., & Hsiao, S. S. (2007). Texture perception through direct and indirect touch: An analysis of perceptual space for tactile textures in two modes of exploration. Somatosensory & Motor Research, 24(1-2), 53-70.

- Otake, K., Okamoto, S., Akiyama, Y., & Yamada, Y. (2022). Tactile texture display combining vibrotactile and electrostatic-friction stimuli: Substantial effects on realism and moderate effects on behavioral responses. ACM Transactions on Applied Perception, 19(4), 1-18.
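The sonification work cited above (Ali et al., 2020; Sawe et al., 2020) rests on turning data values into sound. As a rough illustration only, the Python sketch below uses one common technique, parameter-mapping sonification, in which each data point becomes a short sine tone whose pitch rises linearly with the value. It is not the method of either paper: the helper names (`value_to_frequency`, `sonify`, `write_wav`), the 220 to 880 Hz pitch range, the note length, and the 10 ms fade are all illustrative assumptions. It uses only the Python standard library.

```python
import math
import struct
import wave


def value_to_frequency(value, vmin, vmax, fmin=220.0, fmax=880.0):
    """Linearly map a data value in [vmin, vmax] to a pitch in [fmin, fmax] Hz.

    The pitch range is an illustrative assumption, not taken from the cited work.
    """
    if vmax == vmin:
        # A flat series has no variation to convey; use the lowest pitch.
        return fmin
    t = (value - vmin) / (vmax - vmin)
    return fmin + t * (fmax - fmin)


def sonify(values, note_seconds=0.25, sample_rate=22050, amplitude=0.5):
    """Turn a data series into 16-bit PCM samples, one sine tone per value."""
    vmin, vmax = min(values), max(values)
    n = int(round(note_seconds * sample_rate))  # samples per note
    ramp = max(1, int(round(0.01 * sample_rate)))  # ~10 ms fade in/out to avoid clicks
    samples = []
    for v in values:
        freq = value_to_frequency(v, vmin, vmax)
        for i in range(n):
            # Linear fade at both ends of each note, flat (1.0) in the middle.
            envelope = min(1.0, i / ramp, (n - 1 - i) / ramp)
            s = amplitude * envelope * math.sin(2 * math.pi * freq * i / sample_rate)
            samples.append(int(s * 32767))
    return samples


def write_wav(path, samples, sample_rate=22050):
    """Write mono 16-bit little-endian PCM samples to a WAV file."""
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(sample_rate)
        wf.writeframes(struct.pack(f"<{len(samples)}h", *samples))
```

For example, `write_wav("series.wav", sonify([3.1, 4.0, 7.5, 11.2]))` would write one second of audio in which rising values are heard as rising pitch (the series is made up for illustration). A linear pitch mapping is the simplest choice; richer designs might also vary timbre or loudness, or map values onto a logarithmic (musical) pitch scale, since listeners perceive pitch intervals roughly logarithmically.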

Raw/Original Data Components

What can be done?

- Big Data

- Li, J., Li, D., Savarese, S., & Hoi, S. (2023, July). BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. In International Conference on Machine Learning (pp. 19730-19742). PMLR.

- Leotta, M., Mori, F., & Ribaudo, M. (2023). Evaluating the effectiveness of automatic image captioning for web accessibility. Universal Access in the Information Society, 22(4), 1293-1313.

- Mei, X., Liu, X., Plumbley, M. D., & Wang, W. (2022). Automated audio captioning: An overview of recent progress and new challenges. EURASIP Journal on Audio, Speech, and Music Processing, 2022(1), 26.

- Audio visualization

- Truskinger, A., Brereton, M., & Roe, P. (2018, October). Visualizing five decades of environmental acoustic data. In 2018 IEEE 14th International Conference on e-Science (e-Science) (pp. 1-10). IEEE.

- Cottingham, M. D., & Erickson, R. J. (2020). Capturing emotion with audio diaries. Qualitative Research, 20(5), 549-564.

- Visual sonification

- Ali, S., Muralidharan, L., Alfieri, F., Agrawal, M., & Jorgensen, J. (2020). Sonify: Making visual graphs accessible. In Human Interaction and Emerging Technologies: Proceedings of the 1st International Conference on Human Interaction and Emerging Technologies (IHIET 2019), August 22-24, 2019, Nice, France (pp. 454-459). Springer International Publishing.

- Sawe, N., Chafe, C., & Treviño, J. (2020). Using data sonification to overcome science literacy, numeracy, and visualization barriers in science communication. Frontiers in Communication, 5, 46.

- Tactile vibration responses

- Yoshioka, T., Bensmaia, S. J., Craig, J. C., & Hsiao, S. S. (2007). Texture perception through direct and indirect touch: An analysis of perceptual space for tactile textures in two modes of exploration. Somatosensory & Motor Research, 24(1-2), 53-70.

- Otake, K., Okamoto, S., Akiyama, Y., & Yamada, Y. (2022). Tactile texture display combining vibrotactile and electrostatic-friction stimuli: Substantial effects on realism and moderate effects on behavioral responses. ACM Transactions on Applied Perception, 19(4), 1-18.

- Raw/Original Data Components

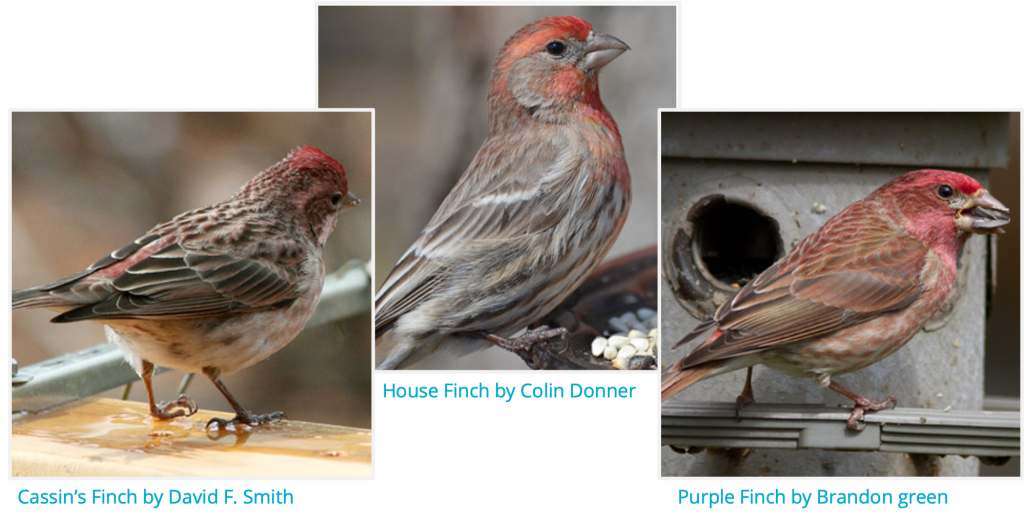

- Van Horn, G., Branson, S., Farrell, R., Haber, S., Barry, J., Ipeirotis, P., … & Belongie, S. (2015). Building a bird recognition app and large scale dataset with citizen scientists: The fine print in fine-grained dataset collection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 595-604).

- Gemmeke, J. F., Ellis, D. P., Freedman, D., Jansen, A., Lawrence, W., Moore, R. C., … & Ritter, M. (2017, March). Audio Set: An ontology and human-labeled dataset for audio events. In 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 776-780). IEEE.

- Feybi11. (2010, July 16). [HSD] Practise Toniic, ChrizZ, Feybi (Melbourne Shuffle Hamburg) [Video]. YouTube. https://www.youtube.com/watch?v=4X2aUZFZlzc&t=31s

- Diment, A., Mesaros, A., Heittola, T., & Virtanen, T. (2017). TUT Rare sound events, Development dataset [Data set]. Zenodo. https://doi.org/10.5281/zenodo.401395

- Gaur, M., Alambo, A., Sain, J. P., Kursuncu, U., Thirunarayan, K., Kavuluru, R., Sheth, A., Welton, R., & Pathak, J. (2019, May 4). Reddit C-SSRS Suicide Dataset. The World Wide Web Conference. https://doi.org/10.5281/zenodo.2667859

- Ptaszynski, M., Pieciukiewicz, A., Dybala, P., Skrzek, P., Soliwoda, K., Fortuna, M., Leliwa, G., & Wroczynski, M. (2022). Expert-annotated dataset to study cyberbullying in Polish language [Data set]. Zenodo. https://doi.org/10.5281/zenodo.7188178