Computing News

CCR Recent News

Sandia, along with Lawrence Livermore and Los Alamos National Laboratories, have entered a third round of collaborative research and development with Cerebras Systems through the Advanced Memory Technology program. Read the full...

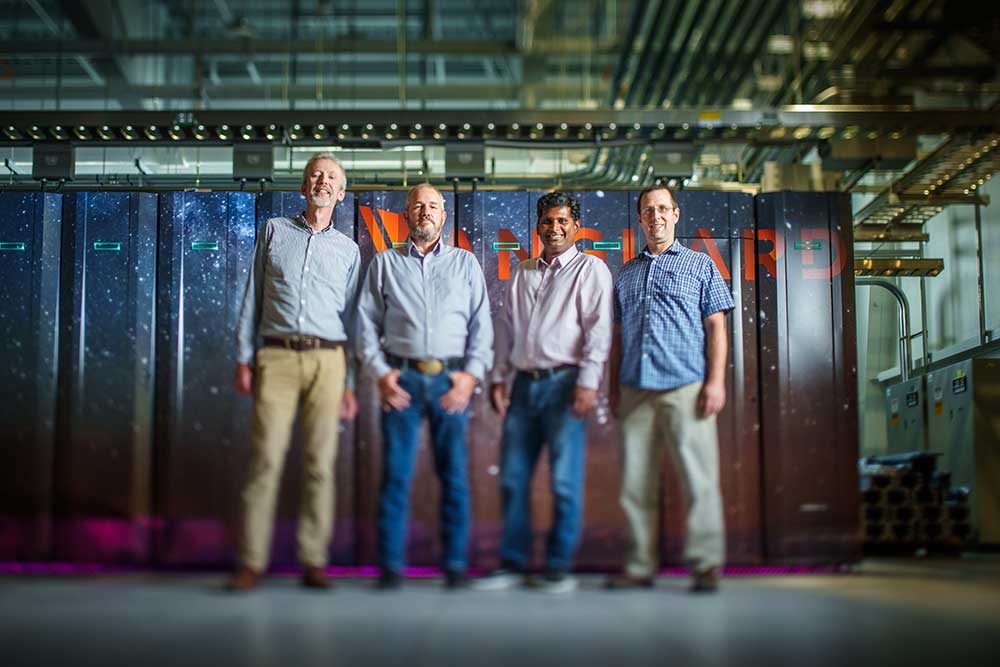

CCR researcher Siva Rajamanickam has been recognized by insideHPC as one of the “Vanguards of HPC-AI,” which spotlights emerging thought leaders and innovators in HPC- AI and scientific computing. Siva...

CCR researcher Christian Trott has been recognized by insideHPC as one of the “Vanguards of HPC-AI.” The insideHPC Vanguards series is intended to spotlight emerging thought leaders and innovators in...

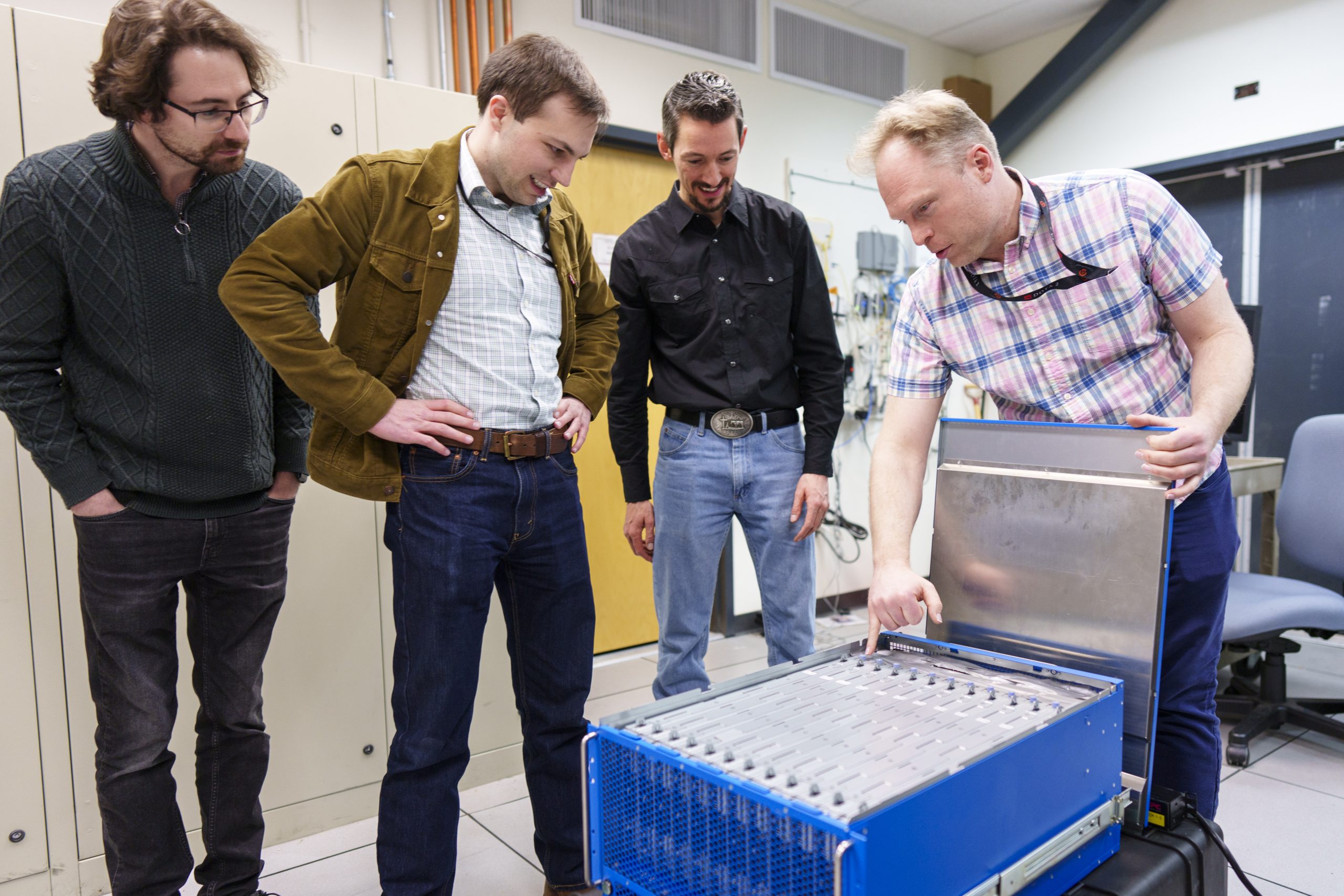

The Neural Exploration and Research Lab in Sandia’s Center for Computing Research has partnered with the German startup SpiNNcloud to deploy a large-scale SpiNNaker2 system. Each SpiNNaker2 chip contains 153...

Several members of Sandia's Center for Computing Research have been recognized for their outstanding contributions as part of the leadership team of the Exascale Computing Project (ECP). This ambitious 7-year...