What if a plain piece of paper could recognize objects in its vicinity? What if a window could change from transparent to opaque when paparazzi walk by? What if your self-driving car was suddenly much more energy efficient?

These and many more scenarios are exciting possibilities in the field of neuromorphic optical recognition performed by physical systems. This emerging field focuses on mimicking human object recognition processes by using passive synthetic components.

Most people are familiar with bar code readers, which use reflected laser light to scan product codes and enter them into the cash register. And anyone with a newer smartphone is familiar with its evolving facial recognition technology, which works in normal light.

Sandia researchers posed the question: What if there were a fast, accurate and simple method for synthetic neuromorphic object recognition? Something that didn’t require complex computer analysis, and that could recognize not just a single face but an entire world of different, complex objects, using normal daylight or any other kind of light. Something that worked the same basic way the human eye and brain work. They decided to use the power of Sandia’s Chama HPC supercomputer to find out.

elements (eye lens or optical lenses) and directed onto a photodetector (rods and cones, or a camera). This last process converts photons into electrons, which are then analyzed by an algorithm (in the brain or in a computer) to reach a conclusion (“what a cute puppy!”).

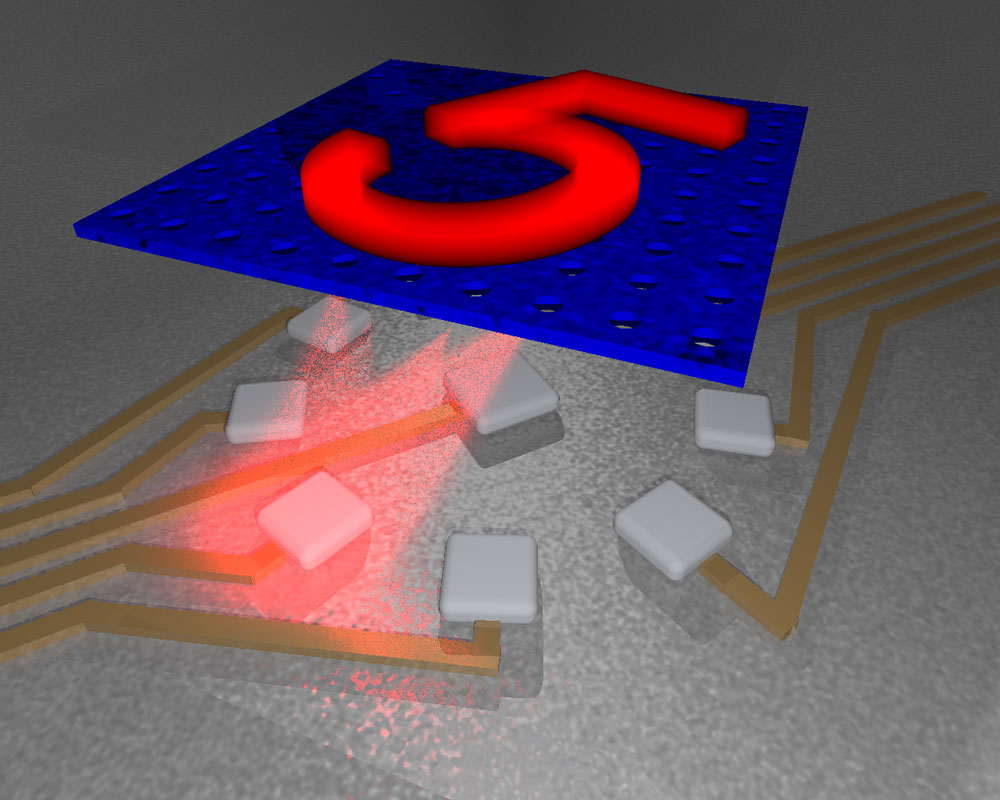

The new approach studied here is shown at right, where a passive material replaces several steps in the process, enabling low energy consumption and fast processing.

Can it be done?

The most complex part of object recognition is the computation stage. Figure 1 depicts the mental analysis in a biological system and the algorithmic analysis in a synthetic system. Both systems require the optical data to be converted to electrical data so it can be received by the brain or a computer chip for analysis. In synthetic systems, the conversion and computation stages are resource-intensive, costing both energy and time. In the human brain, the same task is performed quickly and easily because neurons and synapses are highly interconnected, and the brain can generalize after seeing only a few examples.

The Sandia team decided to determine whether structured optical materials could be taught to act more like the human brain and recognize classes of objects after learning by example. Rather than design, manufacture, and test experimental hardware components, they wrote simulation codes in Fortran to determine the feasibility of their design, train a virtual “smart chip,” and then optimize the design.

Instead of the more traditional approach of converting optical data into electrical signals and then analyzing those signals with complex algorithms, the team decided to fold the entire process into a single trainable structured optical material, so that the recognition itself requires no photon-to-electron conversion and no algorithmic analysis.

Design and simulation approach

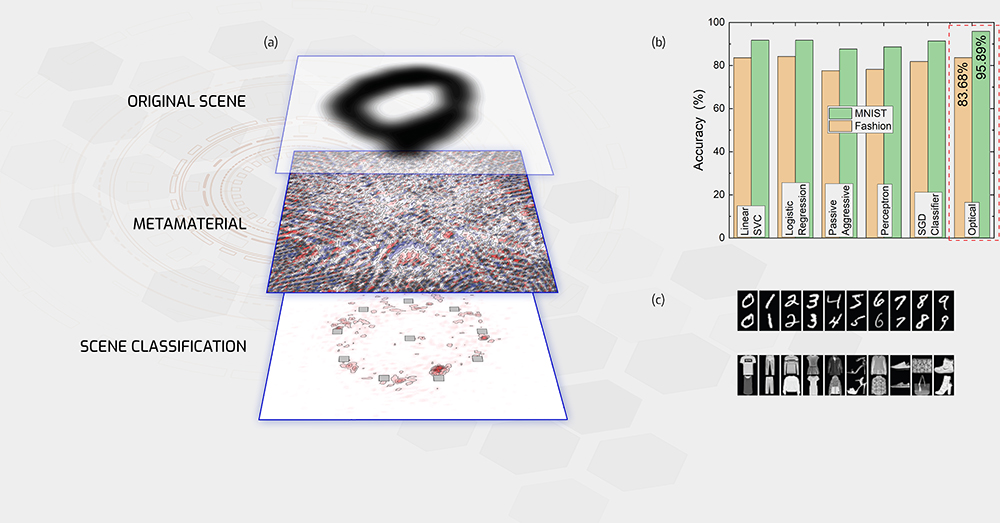

In the virtual world of supercomputer simulations, the team used Fortran to design a three-part system: an input light field corresponding to the scene of interest; a materials layer containing several thousand apertures filled to different thicknesses; and a detector layer of 10 sensors, each designated to recognize one class of objects (see Figure 2).
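
One way to picture what such a simulation computes is the simplified scalar model below. It is a sketch under our own assumptions, not the team’s published formulation: each aperture $k$ is treated as a point source carrying the input field $u_k$, its fill thickness $t_k$ (in a material of refractive index $n$) adds a phase delay through a thin-element approximation, light of wavelength $\lambda$ travels a distance $r_{kj}$ to sensor $j$, and the constant prefactors and obliquity factors of the Huygens-Fresnel principle are dropped.

$$
\phi_k = \frac{2\pi (n-1)\, t_k}{\lambda}, \qquad
E_j = \sum_{k} u_k\, e^{i\phi_k}\, \frac{e^{i 2\pi r_{kj}/\lambda}}{r_{kj}}, \qquad
I_j = \lvert E_j \rvert^2, \qquad
\hat{c} = \arg\max_{j \in \{0,\dots,9\}} I_j
$$

In this picture the only trainable quantities are the thicknesses $t_k$, and the predicted class $\hat{c}$ is simply the sensor that collects the most light.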

The team then trained the materials layer to recognize an object type, either a specific Arabic numeral (each of the numerals 0 to 9) or a particular clothing type (one of 10 possible types), by illuminating the materials layer with thousands of example images from one of two standard image databases (MNIST for numerals, Fashion-MNIST for clothing types). As the images were projected onto the materials layer, the structure of each aperture was adjusted until the layer “learned” the structure needed to classify the images. Once the materials structure had been fine-tuned in this way, the materials layer could perform inference, i.e., recognize previously unseen images with high accuracy. The example in Figure 3a shows a light field in the shape of the handwritten digit “0” impinging on the trained material, producing a light pattern on the sensor plane. The maximum intensity on the sensor plane falls on the sensor designated to recognize a “0”. As seen in Figure 3b, this optical system far surpasses the performance of several well-known electronic linear classifiers.
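
The train-then-infer loop described above can be sketched in a few dozen lines of Python. This is a toy under stated assumptions, not the team’s Fortran code: it models each aperture as a point source whose fill thickness t adds a phase delay of 2π(n−1)t/λ, propagates light to the sensors with the Huygens-Fresnel point-source term (prefactors dropped), and shrinks the problem to a hypothetical 6 × 6 grid of apertures, three sensors instead of 10, and three made-up bar-shaped “image” classes in place of MNIST. The article does not say how the apertures were optimized; plain gradient descent on the negative log of the fraction of light landing on the correct sensor is our choice. All names (`train`, `classify`, `SENSORS`, and so on) are hypothetical.

```python
import cmath
import math
import random

# Toy parameters: all hypothetical, in units where the wavelength is 1.
WAVELENGTH = 1.0
INDEX = 1.5                       # assumed refractive index of the aperture fill
GRID = 6                          # 6 x 6 apertures (the real layer has several thousand)
PITCH = 2.0                       # spacing between aperture centres
DISTANCE = 20.0                   # material layer to sensor plane
SENSORS = [(0.0, 5.0), (-5.0, -3.0), (5.0, -3.0)]  # (x, y) of one sensor per toy class
DPHI_DT = 2 * math.pi * (INDEX - 1) / WAVELENGTH   # phase delay per unit fill thickness
T_PERIOD = WAVELENGTH / (INDEX - 1)                # thickness that adds a full 2*pi
EPS = 1e-12


def propagation_matrix():
    """h[k][j]: point-source term exp(i*2*pi*r/lambda)/r from aperture k to sensor j."""
    offset = (GRID - 1) * PITCH / 2
    h = []
    for r in range(GRID):
        for c in range(GRID):
            ax, ay = c * PITCH - offset, r * PITCH - offset
            row = []
            for sx, sy in SENSORS:
                dist = math.sqrt((sx - ax) ** 2 + (sy - ay) ** 2 + DISTANCE ** 2)
                row.append(cmath.exp(2j * math.pi * dist / WAVELENGTH) / dist)
            h.append(row)
    return h


def forward(u, thickness, h):
    """Per-aperture contributions a[k][j], sensor fields E_j and intensities I_j = |E_j|^2."""
    n_s = len(SENSORS)
    a = [[u[k] * cmath.exp(1j * DPHI_DT * thickness[k]) * h[k][j] for j in range(n_s)]
         for k in range(len(u))]
    fields = [sum(row[j] for row in a) for j in range(n_s)]
    return a, fields, [abs(e) ** 2 for e in fields]


def classify(u, thickness, h):
    """Inference: the brightest sensor names the class."""
    intensity = forward(u, thickness, h)[2]
    return max(range(len(intensity)), key=intensity.__getitem__)


def loss_and_grad(data, thickness, h):
    """Mean of -log(I_label / sum_j I_j) and its gradient with respect to each thickness."""
    total, grad = 0.0, [0.0] * len(thickness)
    for u, label in data:
        a, fields, intensity = forward(u, thickness, h)
        s = sum(intensity)
        total += math.log(s + EPS) - math.log(intensity[label] + EPS)
        for k in range(len(thickness)):
            # dI_j/dphi_k = 2 Re(conj(E_j) * i * a_kj), since dE_j/dphi_k = i * a_kj
            d = [2 * (fields[j].conjugate() * 1j * a[k][j]).real for j in range(len(SENSORS))]
            dloss_dphi = sum(d) / (s + EPS) - d[label] / (intensity[label] + EPS)
            grad[k] += dloss_dphi * DPHI_DT / len(data)
    return total / len(data), grad


def toy_dataset(rng, per_class=8):
    """Three made-up classes (vertical bar, horizontal bar, diagonal) plus random noise."""
    bases = [
        [1.0 if c == GRID // 2 else 0.0 for r in range(GRID) for c in range(GRID)],
        [1.0 if r == GRID // 2 else 0.0 for r in range(GRID) for c in range(GRID)],
        [1.0 if r == c else 0.0 for r in range(GRID) for c in range(GRID)],
    ]
    return [([v + 0.2 * rng.random() for v in base], label)
            for label, base in enumerate(bases) for _ in range(per_class)]


def train(steps=150, lr=0.05, seed=0):
    """Gradient descent on the fill thicknesses; returns loss history, accuracy, thicknesses."""
    rng = random.Random(seed)
    h = propagation_matrix()
    data = toy_dataset(rng)
    thickness = [rng.random() * T_PERIOD for _ in range(GRID * GRID)]
    history = []
    for _ in range(steps):
        loss, grad = loss_and_grad(data, thickness, h)
        history.append(loss)
        # Phase repeats every T_PERIOD, so wrap each thickness into one period.
        thickness = [(t - lr * g) % T_PERIOD for t, g in zip(thickness, grad)]
    accuracy = sum(classify(u, thickness, h) == y for u, y in data) / len(data)
    return history, accuracy, thickness


if __name__ == "__main__":
    history, accuracy, _ = train()
    print(f"mean loss {history[0]:.3f} -> {history[-1]:.3f}, toy training accuracy {accuracy:.2f}")
```

Running the file prints the mean loss before and after training and the accuracy on the toy training set. Wrapping each thickness into a single period works in this model because only the phase delay, not the absolute thickness, affects the interference at the sensors; a physical layer would also have to respect fabrication limits that the sketch ignores.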

Detailed analysis suggests that these systems could use orders of magnitude less energy while performing inference orders of magnitude faster. In contrast to other approaches for on-chip optical computing, which use waveguides that reduce the speed of light propagation, this system uses light propagation in free space: it works at the speed of light. Also, only a few low-energy sensors need to be monitored, compared to the millions of pixels in a camera.
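
To put the readout and speed claims in concrete terms, the short calculation below uses illustrative numbers that are our assumptions, not figures from the Sandia work: a 12-megapixel camera, the article’s 10 class sensors, and a 1 mm free-space gap between the material layer and the sensors. The readout ratio is only a proxy for energy, since it counts detector values to be digitized rather than joules.

```python
# Back-of-envelope comparison; every number below is an illustrative assumption.
SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum (exact by definition of the metre)
CAMERA_PIXELS = 12_000_000      # assumed 12-megapixel camera
CLASS_SENSORS = 10              # one sensor per object class, as in the article
GAP_M = 1e-3                    # assumed 1 mm gap from material layer to sensors


def readout_ratio(pixels: int, sensors: int) -> float:
    """Factor by which fewer detector values must be read out and digitized."""
    return pixels / sensors


def transit_time_s(distance_m: float, refractive_index: float = 1.0) -> float:
    """Time for light to cross distance_m in a medium of the given index (1.0 = free space)."""
    return distance_m * refractive_index / SPEED_OF_LIGHT


if __name__ == "__main__":
    print(f"readout ratio: {readout_ratio(CAMERA_PIXELS, CLASS_SENSORS):,.0f}")
    print(f"free-space transit time: {transit_time_s(GAP_M) * 1e12:.2f} ps")
```

With these numbers, `readout_ratio(CAMERA_PIXELS, CLASS_SENSORS)` returns 1,200,000 (about six orders of magnitude), and `transit_time_s(GAP_M)` is about 3.3 picoseconds. Passing a larger `refractive_index` models a slower medium, and the transit time grows in proportion.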

To demonstrate the concept, the team relied heavily on Sandia’s HPC resources. They ran hundreds of single-processor simulations to probe the complex design space for these systems. Their more recent work on incoherent light sources used about 1,000 times more computational resources, which required developing a new multi-processor simulation approach. Because the researchers include the physics of light propagation directly in their simulations, they did not rely on conventional machine-learning software; instead, they developed a Fortran simulation approach from scratch that lets them fine-tune every aspect of the neuromorphic system.

trained material, and the light intensity on the output plane containing the 10 sensors. (b) Results on the MNIST and Fashion-MNIST datasets show high performance exceeding that of electronic linear classifiers. (c) Examples from the MNIST and Fashion-MNIST image databases.

Future work

The team is currently testing their predictions with an experimental system they recently designed and built. This work opens up new possibilities for materials that can passively perform neuromorphic computing functions. While the current work focused on light fields, it could be broadened to analyze other fields such as thermal signatures, radio frequency signals, mechanical variations and chemical reactions.